Medical Education

Session: Medical Education 10

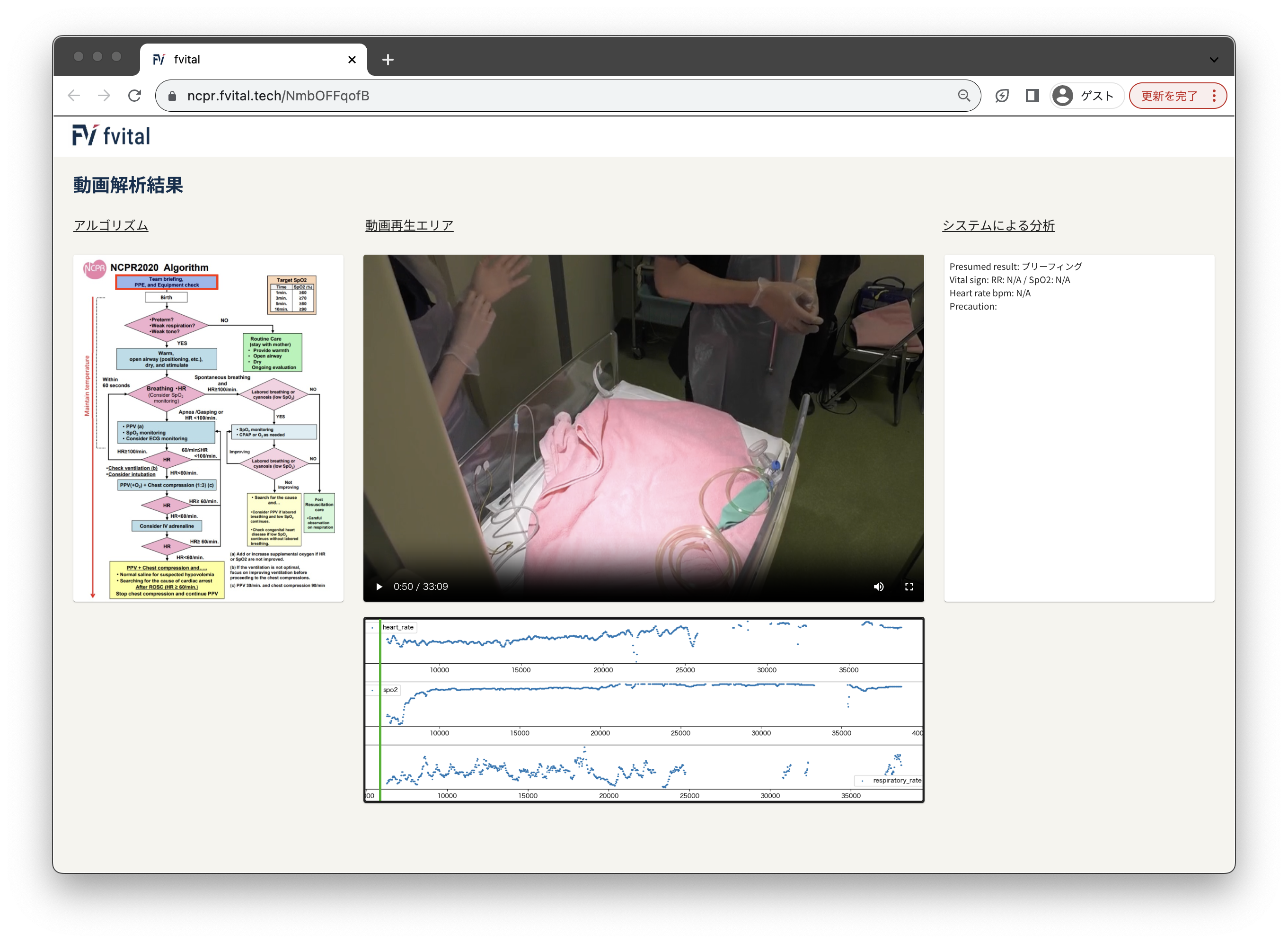

518 - Deep-learning-based automatic evaluation of neonatal resuscitation in clinical settings

Monday, May 6, 2024

9:30 AM - 11:30 AM ET

Poster Number: 518

Publication Number: 518.3197

Reika Fujimura, MEng (she/her/hers)

Data Scientist

Fvital Inc.

Kitchener, Ontario, Canada

Presenting Author(s)

Background: Neonatal resuscitation is essential for newborn survival, particularly in addressing birth asphyxia. Although neonatal mortality rates have improved remarkably worldwide over the past decades, progress remains uneven across regions.

Alongside training new personnel, retraining existing staff is a crucial challenge, which increases the demand for support in continuously improving and maintaining professional skills. Ideally, every resuscitation could serve as an opportunity for skill maintenance, with feedback from experienced trainers. However, the scarcity of available trainers limits such opportunities.

To address this issue, we believe that a widely accessible AI-based feedback system would be a significant breakthrough, enabling clinicians to take full advantage of every opportunity to sustain and enhance their skills.

Objective: This study introduces an end-to-end automatic video analysis system designed to promote self-reflection based on videos recorded in clinical settings. We evaluate the system's performance by calculating the accuracy of scene detection for key events defined in the neonatal resuscitation algorithm, and we also discuss its usability.

Design/Methods: We used video and clinical recordings of neonatal resuscitation at birth for 11 newborns born between 2022 and 2023 at the National Center for Child Health and Development (NCCHD) in Tokyo, Japan. A deep-learning-based multi-step model was developed to automatically detect events from features extracted from the videos.
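
The abstract does not describe the internal steps of the model. Purely as an illustration of one common way a multi-step pipeline can turn per-frame outputs into discrete events, the sketch below assumes a hypothetical upstream classifier that emits one probability per video frame for a given event. It smooths those probabilities with a centered moving average, applies a threshold, and merges runs of positive frames into (start, end) segments. The function names, the window size of 5 frames, the 0.5 threshold, and the 3-frame minimum length are all illustrative assumptions, not the authors' method.

```python
from typing import List, Optional, Tuple


def moving_average(values: List[float], window: int) -> List[float]:
    """Centered moving average; the window shrinks at the sequence edges."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def frames_to_segments(
    probs: List[float],
    window: int = 5,
    threshold: float = 0.5,
    min_length: int = 3,
) -> List[Tuple[int, int]]:
    """Turn per-frame probabilities for one event into (start, end) segments.

    Hypothetical post-processing step: smooth, threshold, then keep runs of
    at least `min_length` consecutive positive frames. `end` is exclusive.
    """
    smoothed = moving_average(probs, window)
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, p in enumerate(smoothed):
        if p >= threshold and start is None:
            start = i  # a new positive run begins
        elif p < threshold and start is not None:
            if i - start >= min_length:
                segments.append((start, i))
            start = None  # the run ended (kept or discarded)
    # Close a run that is still open at the last frame.
    if start is not None and len(smoothed) - start >= min_length:
        segments.append((start, len(smoothed)))
    return segments


if __name__ == "__main__":
    # Made-up toy input: 5 low frames, 10 high frames, 5 low frames.
    toy = [0.1] * 5 + [0.9] * 10 + [0.1] * 5
    print(frames_to_segments(toy))
```

Smoothing before thresholding suppresses single-frame spikes, at the cost of slightly shifting segment boundaries; the appropriate window would depend on the video frame rate and on how long each resuscitation event typically lasts.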

Results: Using object detection and tracking of the clinician's hands, our model recognized several of the main resuscitation scenes with high accuracy, including birth and drying (98%), continuous positive airway pressure (CPAP; 79%), and laryngoscopy (71%). The model also detected other events, such as auscultation, stimulation, manual ventilation, and suction, but with lower accuracy. Based on the scene detection results, we developed a dedicated application for practical use.
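
The abstract reports a per-event accuracy but does not define how it was computed. As a hedged sketch, the code below implements one plausible definition: for each annotated event, the fraction of its ground-truth frames that the model also labels with that event (per-event frame-level recall). Frames are modeled as sets of event labels because events such as CPAP and auscultation can occur at the same time. The function name, the label strings, and the toy data are hypothetical and are not taken from the study.

```python
from typing import Dict, List, Set


def per_event_accuracy(truth: List[Set[str]], pred: List[Set[str]]) -> Dict[str, float]:
    """Fraction of each event's ground-truth frames that the model also labels with it.

    `truth[i]` and `pred[i]` are the sets of events active in frame i.
    Events that never appear in the ground truth are not scored.
    """
    if len(truth) != len(pred):
        raise ValueError("truth and pred must cover the same frames")
    totals: Dict[str, int] = {}
    hits: Dict[str, int] = {}
    for true_events, pred_events in zip(truth, pred):
        for event in true_events:
            totals[event] = totals.get(event, 0) + 1
            if event in pred_events:
                hits[event] = hits.get(event, 0) + 1
    return {event: hits.get(event, 0) / n for event, n in totals.items()}


if __name__ == "__main__":
    # Made-up toy annotations for six frames (not study data).
    truth = [{"drying"}, {"drying"}, {"drying"}, {"drying"}, {"cpap"}, {"cpap"}]
    pred = [{"drying"}, {"drying"}, {"drying"}, set(), {"cpap"}, {"drying"}]
    print(per_event_accuracy(truth, pred))  # drying: 3/4, cpap: 1/2
```

This definition ignores false positives; a fuller evaluation would likely also report precision or a segment-level overlap measure for each event.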

Conclusion(s): We have shown that our system learns to identify several important events in neonatal resuscitation with high accuracy, even with a small dataset. Our work advocates for expanding professional training opportunities, ultimately working toward a world where every newborn can receive high-quality clinical care.