Medical Education

Session: Medical Education 1

524 - Utilizing Simulation-based Training to Institute a Competency-Based Interviewing Approach

Friday, May 3, 2024

5:15 PM - 7:15 PM ET

Poster Number: 524

Publication Number: 524.444

Simranjeet S. Sran, MD, MEd, CHSE, FAAP (he/him/his)

Director of Education, Simulation Program

Children's National Hospital

Washington, District of Columbia, United States

Presenting Author(s)

Background: The AAMC and ACGME promote holistic review of applicants for program admissions. Behavioral interviews and the Multiple Mini Interview (MMI) are commonly used in this process. However, interviews can also be a source of inequity and bias in candidate selection, a problem that is exacerbated when limited resources prevent programs from conducting multiple interviews per candidate. Competency-based interviewing (CBI) can mitigate bias by having the interviewer focus on objective, established competencies rather than subjective impressions of a candidate. We developed a simulation-based CBI training for faculty, built around pre-recorded standardized interviews, to assess this approach.

Objective: To measure the effectiveness of simulation-based training in improving faculty comfort with competency-based interviewing, and its effects on rating concordance and accuracy.

Design/Methods: The simulation training consisted of pre-recorded interviews, each scripted to show strength in a different combination of the nine AAMC Core Competencies for Entering Medical Students, followed by semi-structured debriefs. Faculty were asked to rate each simulated applicant as exhibiting or not exhibiting strength in each competency, using a rubric that briefly explained each one. One simulated interview was viewed before the training and again at its completion, with faculty submitting pre- and post-training rubrics. Faculty also completed a retrospective pre-then-post survey to assess comfort with CBI.

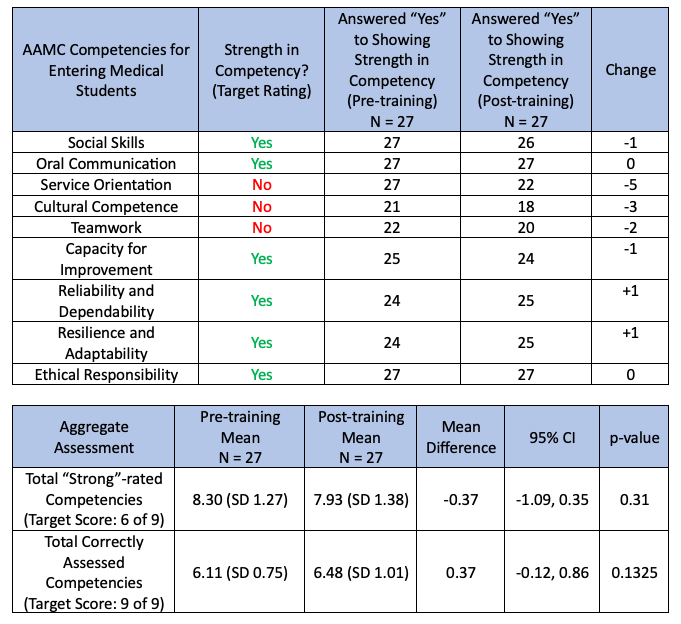

Results: The training was completed by 32 interprofessional medical school faculty, 27 of whom submitted both pre- and post-training rubrics. Faculty reported significantly increased comfort with CBI after the training (Figure 1), with a post-training mean score of 3.75 out of 4 (mean difference 0.71, 95% CI [0.45, 0.97], p < 0.0001). Faculty also rated the ease of using a competency-based rubric at 3.46 out of 4, compared with 2.95 for their previous experience with a non-CBI rubric (mean difference 0.51, 95% CI [0.08, 0.94], p = 0.0215). Concordance of ratings between faculty for individual competencies, total number of competencies, and rubric score (compared to target score) is presented in Figure 2. Concordance was high both pre- and post-training, though the mean rubric score was higher than the target score. After training, accuracy compared to the target rubric improved.
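The abstract does not state how concordance and accuracy were calculated, so the following is only an illustrative sketch of one simple way such figures could be derived from binary rubrics. It assumes each rubric is a list of nine 0/1 ratings (1 = exhibits strength) and that a target rubric exists for the scripted interview. The `TARGET`, `pre`, and `post` values and all function names are hypothetical and are not study data.

```python
from typing import List

Rubric = List[int]  # nine 0/1 ratings, one per competency (assumed encoding)

# Hypothetical target rubric for one scripted interview (invented values).
TARGET: Rubric = [1, 0, 1, 1, 0, 0, 1, 0, 1]


def accuracy(rating: Rubric, target: Rubric) -> float:
    """Fraction of competencies on which one rater matches the target."""
    return sum(r == t for r, t in zip(rating, target)) / len(target)


def mean_accuracy(ratings: List[Rubric], target: Rubric) -> float:
    """Average accuracy across all raters."""
    return sum(accuracy(r, target) for r in ratings) / len(ratings)


def item_agreement(ratings: List[Rubric]) -> List[float]:
    """Per competency, the share of raters who gave the majority rating."""
    n = len(ratings)
    shares = []
    for column in zip(*ratings):  # one column per competency
        yes = sum(column)
        shares.append(max(yes, n - yes) / n)
    return shares


def mean_score(ratings: List[Rubric]) -> float:
    """Average number of competencies rated as a strength."""
    return sum(sum(r) for r in ratings) / len(ratings)


# Invented ratings from three hypothetical raters, before and after training.
pre = [
    [1, 1, 1, 1, 0, 1, 1, 0, 1],
    [1, 1, 1, 1, 0, 0, 1, 0, 1],
    [1, 0, 1, 1, 1, 1, 1, 0, 1],
]
post = [
    [1, 0, 1, 1, 0, 0, 1, 0, 1],
    [1, 1, 1, 1, 0, 0, 1, 0, 1],
    [1, 0, 1, 1, 0, 0, 1, 0, 1],
]

if __name__ == "__main__":
    print("mean accuracy pre: ", round(mean_accuracy(pre, TARGET), 3))
    print("mean accuracy post:", round(mean_accuracy(post, TARGET), 3))
    print("mean score pre vs target:", mean_score(pre), sum(TARGET))
    print("item agreement pre:", item_agreement(pre))
```

In this toy data, raters agree with one another on most competencies while over-crediting strengths relative to the target, which mirrors the pattern described above: high concordance with a mean score above target, and accuracy that rises after training. A chance-corrected statistic such as Fleiss' kappa would be a stricter measure of concordance than raw agreement.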

Conclusion(s): Simulation-based training is effective in promoting the implementation of CBI. A single training session significantly improves faculty comfort with this approach and with a competency-based rubric. Faculty ratings show high concordance, and accuracy improves after training.
